It isn’t an impossible task to monitor and evaluate (M&E) intangibles, knowledge or knowledge management (KM), but it requires a series of tough choices in a maze of possibilities. This is what Simon Hearn and I are discovering as we try to summarise, synthesise and build upon the two M&E of KM papers commissioned earlier, as well as the reflective evaluation papers by Chris Mowles.

We are still struggling a great deal with how to set the boundaries of our study. So this is a good opportunity to briefly share a blog post I wrote recently on this very topic, along with some preliminary thoughts. Your views would certainly help us get going.

In attempting to monitor knowledge and/or knowledge management, one can look at an incredible number of issues. This is probably why there is so much confusion and questioning around this topic (see this good blog post by Kim Sbarcea of ‘ThinkingShift’, which highlights some of these challenges).

Given this confusion, it seems to me that if we want to make sense of M&E of KM, we should look at three things. The first is the factors that influence the design of M&E frameworks and activities. The second is the approaches that result from those factors. The third is the components that an M&E framework could touch upon.

Among the factors that seem to impact on the design (and later implementation) of M&E of KM, I would identify:

- The power play, influence, roles and responsibilities that precede M&E. Let’s put these under a global ‘political economy’ heading that asks: who finances / commissions / implements / contributes to / benefits from M&E of KM?

- The world view that dictates the design: from a theory of social learning to a cognitivist approach, what is shaping the view of knowledge management that one wants to monitor? This seems to have implications for the type of M&E approach and tools chosen. And perhaps it is at this juncture that multiple knowledges can make the most serious contribution to the discussion?

- The purpose for carrying out M&E (obviously related to the above factors): accountability, learning, strategic reflection, capacity development, etc. All of these could also influence the choices made for a particular system or set of tools.

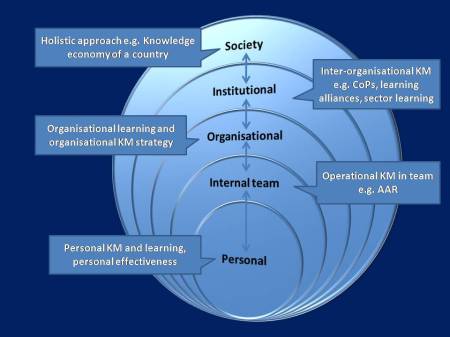

- The levels at which the unit observed operates, ranging from individual to societal, as described in the figure below…

Of course, in principle the knowledge management strategy (or the overall strategy, if there is no KM strategy in place) should influence the choices made. However, such a strategy would itself probably be subject to the factors described above, so perhaps it is not a relevant factor in its own right.

The combination of the above perhaps results in a range of approaches along a spectrum. At one end (to caricature it) sit very linear approaches to M&E of knowledge (management), and at the other end sit purely emergent approaches to M&E of KM. Somewhere in the middle would be all the pragmatic approaches that combine elements from the two ends. If this holds a grain of truth, my own observation is that most development institutions seem to display one of these pragmatic, in-between approaches. So our spectrum could include:

- Linear approaches to monitoring of KM with a genuine belief in cause and effect and planned intervention;

- Pragmatic approaches to monitoring of KM, promoting trial and error and paying mixed attention to planning (rather linearly) and to observing how reality affects the plan;

- Emergent approaches to M&E of KM, stressing natural combinations of factors, relational and contextual elements, conversations and transformations.

I forgot to mention in my original post that, despite some of the wording, there is no implicit assumption that one end of the spectrum is automatically better. They just differ in scope, ambition and applicability…

In the comparative table below I have tried to sketch the differences between the three groups as I see them now. However, I am not convinced that the third category in particular gives a consistent picture.

| Worldview | Linear approaches to M&E of KM | Pragmatic approaches to M&E of KM | Emergent approaches to M&E of KM |
|---|---|---|---|
| Attitude towards monitoring | Measuring to prove | Learning to improve | Letting go of control to explore natural relations and context |
| Logic | What you planned – what you did – what is the difference? | What you need – what you do – what comes out? | What you do – how and who you do it with – what comes out? |
| Chain of key elements | Inputs – activities – outputs – outcomes – impact | Activities – outcomes – reflections | Conversations – co-creations – innovations – transformations – capacities and attitudes |
| Key question | How well? | What then? | Why, what and how? |
| Outcome expected | Efficiency | Effectiveness | Emergence |
| Key approach | Logical framework and planning | Trial and error | Experimentation and discourse |
| Attitude towards knowledge | Capture and store knowledge (stock) | Share knowledge (flow) | Co-create knowledge and apply it to a specific context |
| Component focus | Information systems and their delivery | Knowledge sharing approaches / processes | Discussions and their transformative potential |
| Information, knowledge or something else: what matters? | Information | Knowledge and learning | Innovation, relevance and wisdom |
| Starting point of monitoring cycle | Expect as planned | Plan and see what happens | Let it be and learn from it |
| End point of monitoring cycle | Readjust the same elements to the sharpest measure (single-loop learning) | Readjust different elements depending on what is most relevant (double-loop learning) | Keep exploring to make more sense, explore your own learning logic (triple-loop learning) |

The very practical issue of budgeting does not come into the picture here, but it definitely influences the M&E approach chosen and the intensity of M&E activities.

Finally, in relation to all the above, it seems easier to explain how a certain M&E framework has been built and which components it assesses. In the table below I have tried to list the most obvious components that an M&E framework could encompass, following this sequence:

- Inputs, as in the resources invested and starting point of monitoring: What areas influence knowledge management and ought to be assessed in terms of their potential to influence positively or negatively any knowledge management initiative or any initiative involving the management of intangibles?

- Throughputs, as in the processes, activities and energies that are involved in any KM initiative.

- Outputs, as in the results of KM initiatives.

| Input (resources and starting point) | Throughput (work processes & activities) | Output (results) |
|---|---|---|
| People (capacities); Culture (values); Leadership; Environment; Systems to be used; Money / budget | Methods / approaches followed; Relationships; (Co-)Creation of knowledge; Rules, regulations, governance of KM; Learning/innovation space; Attitudes displayed | Creation of products & services; Appreciation of products & services; Use/application of products & services; Behaviour changes: doing different things, doing things differently or with a different attitude; Application of learning (learning is fed back into the system) |

Do you have any suggestion about all this? Does it make any sense? Does it help? Is it complete nonsense? Any suggestion would be helpful to make this synthesis piece a little easier to unpack…


Hi Ewen,

you have a tough task.

Before you can undertake evaluation you have to decide on how you will enquire into what it is you want to find out about. You have to take a view on research methods. In order to do this you will be required to further take a view as to which research methods to use based on what you are terming a world view (and what in philosophical terms would be theories of ontology and epistemology). That is, what we take to be reality and how we can know that reality. Valerie Brown wrote a helpful paper on research methods a while ago for the working group, although I can’t remember whether this strayed into questions of ontology/epistemology.

The reason this is difficult is that most people take for granted that their world view is shared by everyone else, or if they become aware that other people take a different world view from themselves, then they will fiercely stand their ground.

Very simplistically, a world view sits along a spectrum with realist/representationalist at one end and interpretivist at the other. Another way of putting this is positivistic vs hermeneutic, or scientific vs social constructionist. The former theories tend to borrow from natural science methods. The latter draw on methods concerned with reflection/reflexivity, which problematise ‘reality’ towards developing more or less convincing interpretations.

A big debate has been going on for decades about the extent to which natural science methods apply in the social world.

So let’s look at positivism. It assumes that there is a real world out there and that the individual cognising subject can perceive this reality, which will be mirrored in his/her mind. It further assumes that the thinking subject can develop methods of faithfully representing this mirroring of reality in ways which anyone else can take up, independent of context or time. In evaluation terms, positivists can ‘prove’ whether something is successful or not. For them, natural science methods are the only scientific methods and can therefore be used in the social world. So when your colleague Sbarcea talks about ‘measuring’ IKM in organisations he is automatically using the language of natural science, which I guess would put him at the positivist end of the spectrum. Evaluation methods towards the positivist end of the spectrum would rely more heavily on quantitative approaches, since it is thought that there is little or no ambiguity around numbers. A realist evaluator would be likely to use structured questionnaires and to draw conclusions from the data without necessarily problematising the answers. Equally, positivism is compatible with cognitivist psychology, which assumes an atomised view of human knowing: an individual ‘has’ knowledge somehow locked up in their head which can be measured and known, or even made explicit at will.

At the other end of the spectrum you have a whole variety of social constructionists, hermeneuticists, structuralists and post-structuralists. They may (or may not) accept that there is a real world out there, but in any case they hold that humans actively make meaning of this world together. As Wittgenstein said, the meaning of the world does not lie in the world. So the range runs from what we might term relativists, who think that all truths are equal (although there are not many of these), through to people who would call themselves post-foundationalists, who think that there is a reality but we can only approximate it, getting nearer and nearer to the truth but never getting there. Some social scientists deny that natural science methods can have anything to say about the social world, because we are always interpreting what we think of as reality (Flyvbjerg) and because language is incredibly slippery. Some social scientists, like Giddens, sit on the fence, saying that neither positivism nor interpretation has a monopoly on the truth.

At the interpretative end of the evaluation spectrum you would find people who have limited patience with quantitative methods and who are likely to favour iterative rounds of meaning-making. They may draw on empirical data as well, but largely favour ethnomethodology, ethnography, participant observation or some such. Interpretivists may well take an interest in intangibles such as power, relationships and social processes of knowing. For example, they would probably assume that there is no such thing as a knowing subject, but that knowing is a process that arises in dynamic social relationships.

It would be my view that there is a dominant view of what evaluation should be, and that this tends towards the positivistic/realist end of the spectrum. This would certainly be true of at least one of the papers you are considering, which unproblematically assumed that rational actors can deliberately make tacit knowledge explicit and that a monetary value could be placed on this. Most evaluations I have undertaken do assume a truth to be discovered, and also assume a linear if-then causality discoverable mostly by natural science methods. I think this is the issue that IKME is contesting, and the idea of ‘many knowledges’ automatically problematises it. For most natural scientists there is only one knowledge which counts.

If you read my papers I guess it will be obvious at which end of the spectrum I sit.

So I am not sure how helpful I found it to contrast linear with emergent ways of knowing, with pragmatic somewhere in between. The opposite of linear is perhaps non-linear, and what you refer to as pragmatic might simply mean sitting on the fence. You are also using emergence in a way that assumes it is the opposite of controlling: you either control something or you ‘let it emerge’. But of course emergence is happening all the time, whether you ‘let it’ or not.

Apologies if I am teaching my grandmother to suck eggs, and apologies also for crashing through all of this so fast and so simplistically.

Hope it’s helpful anyway.

Chris

Hello Chris,

Thank you for your message and perspective. I have to admit that these philosophical references are indeed very useful for the paper that we will write and I was planning on referring to the positivistic-hermeneutic spectrum as well.

The paper itself will not be solely about the synthesis of the two papers (and your evaluation papers) but it will rather build upon them and identify key challenges, pointers, gaps for further research etc.

We will have to see how we go about this idea of linear / emergent perspectives. At any rate, the debate continues and more will follow on this blog in the new year.

For now, thank you very much again for your ideas and let’s continue this chat soon!

Cheers,

Ewen

Good luck Ewen!

Two books that I have come across recently might be helpful. One is Reflexive Methodology by Alvesson and Sköldberg (2009). As the name suggests, it makes a case for reflexivity in social research methods. In doing so it works across the spectrum from positivism, through critical theory, to very reflexive/interpretative methods, so it is useful for understanding the taxonomy of approaches. The other is The Oxford Handbook of Organization Theory, edited by Tsoukas and Knudsen (2009). Its first four essays invite adherents of each of these schools of thought to set out their position: (1) Organization Theory as a Positive Science, (2) Organization Theory as an Interpretative Science, (3) Organization Theory as a Critical Science and (4) Organization Theory as a Post Modern Science. It makes an interesting read to see the spectrum of opinions set alongside each other like this. However, I personally think it stretches the term ‘science’ unless we take science to include its non-linear manifestations, such as certain branches of complexity theory.

Anyway, apologies for going on so and happy researching!

Best

Chris

Hello Chris,

Again, many thanks for pointing to these precious resources. I will read them and see how they guide our reflection on the various approaches to M&E of KM.

Cheers and all the best in 2010!

Ewen

Fascinating discussion, and it goes to the heart of how we are all interpreting the management of knowledge, which is essential if we are going to monitor it!

I think all the themes in Chris Mowles’ contribution are crucial. I have thought that IKM-E has adopted a social constructivist perspective but has not had the opportunity to make it explicit; this is the opportunity.

In tackling wicked problems, we argue that any transdisciplinary/collective inquiry rests on a particular interpretation of the three pillars of the construction of reality: an ontology (the way the world works), an epistemology (the way knowledge/power is put together) and an ethic (a moral purpose).

I have also commented on Simon’s Namibia piece. I mostly introduced the five Western knowledge cultures and suggested including what are often dismissed as intangibles: creativity, vision and purpose. Still, I think these are readily expressed as tangibles.

That’s enough for now – but thanks Ewen for opening the batting!

Valerie

Dear Readers,

Nice horizons sketched by contributors, but back to the question: Monitoring knowledge (management): an impossible task? My answer would be no. For me any human activity for the betterment of humanity can be seen as a dialogue. Some dialogues stay oral, while others refer to scriptures and rites; science and politics hold dialogues on models, descriptions and forecasts to simulate, manage and bend reality 🙂 For me, knowledge sharing (KS) is what matters here, where knowledge sharing and information management (IM) add up to the knowledge management (KM) realm. Those dialogues (KS in situ) are documented as a process, right and wrong, good and bad, in audio, image and text, and this results in information (a paper, papers, minutes, videos, manuals, etc.). Thus information does not result from data but from dialogue (fed with other information and some data). The linear data – information – knowledge – ??? sequence wrong-foots us. The paradigm for that process, from dialogue to information, varies over time and space and with the spectacles used (going back to Plato, Aris and Confucius). When the process is not documented, as in traditional knowledge transfer thinking (figure it out, tell them, next), KS and IM are separated. Oral history is hard work!

What results from dialogue is a good time (hopefully), a network (currently hype-sensitive), information, some capacity and, over time, skills and expertise. All can be ‘measured’, but we have to keep in mind that ‘to measure is to interfere’ (thank you Einstein), or ‘all is relative’ (thanks to Marx == ‘God is dead’) and systems evolution (thanks Darwin and John Gray). In our time information is food for the digits. All that can be packed in bits & bites becomes mash-up-able. One can agree on what indicates progress or success and how to measure ‘good times’, networks, info and skills. To close the loop, information is versioned and fed back into dialogues. Of course the whole loop is prone to manipulation, corruption and clientelism, but that’s life.

Hope this helps 🙂

Thank you Val and Jaap for your contributions!

A few bridges across to your peninsula outposts in the sea of the unknown:

– Valerie, your case for adding creativity as a key intangible is a really interesting point. I would have focused mostly on the capacity to innovate at the level of an organisation/network, but it is indeed crucial to tackle creativity at the personal level and to value, encourage and support it there. I already agreed with all your other contributions in previous conversations, and I look forward to seeing them in the final version of this paper.

– Jaap, I think the idea of promoting dialogues as the key component for KM/KS is also totally justified and should make it to top recommendations.

– I do agree that to measure is to interfere and that not everything ought to be measured. However, the reality of development in the current paradigm is one that favours normative assessments, and we cannot produce work that operates entirely outside the current donor mentality.

However, we can work on bridging the world of donors and that of aid workers who are trying things out (see the brilliant comment by Carl on Ben’s ‘Aid on the edge of chaos’ blog post about social media and complexity: http://su.pr/2natGc). If we get these two communities to engage with one another, we will already be a few inches further.

– One last thing: knowledge sharing is definitely also for me the most powerful way of learning and acquiring new knowledge. However, I wouldn’t dismiss information (management), because some people also make connections just by reading information. Reading information certainly has less dynamic transformative potential than sharing knowledge with someone else, because only one person (i.e. one world view) interprets the information. But it still helps!

All in all, this conversation is really interesting, and I look forward to continuing it as we take all your gems on board!

Cheers,

Hi Ewen,

I do subscribe to the idea of information having transformative power / potential. I would like to include a picture here, but I do not know how, so I am sending it to your irc e-mail.

Best, Jaap

[…] exchange is currently happening on the Giraffe blog and on the KM4DEV mailing list. Our first blog post on the topic provides some ideas for a framework. More […]

[…] It’s quite interesting that this post is also reflected in the current discussion on monitoring and evaluation (M&E) of km which is taking place on The Giraffe: Monitoring knowledge (management): an impossible task. […]

Hi all,

Just a quick link to the presentation that Valerie mentioned in her comment.

It’s based on early conversations between Ewen and me.

Cheers, Simon


[…] (see for instance this and that post) and three on IKM-Emergent programme’s The Giraffe blog (see 1, 2 and 3). Simon Hearn, Valerie Brown, Harry Jones and I are on the […]

Hi Ewen,

Thank you very much for this, although you have left me with a bit of a headache. I have a strong background in M&E, so it is very difficult for me to step away from the linear approach. Still, I think most organizations implementing projects should have an M&E/KM&L system that sits somewhere between what you would refer to as pragmatic and emergent. In fact, many donors are asking for a KM&L component in projects and programs.

When thinking about M&E, I tell my KM course participants to ask themselves the following questions: Why are we sharing information/knowledge? What do we hope to do with new/relevant knowledge, whether generated or created? What may change because of this (policies, procedures, products)? I tell them KM&OL leads to improved programs and services because participants are harnessing new knowledge to change what they are doing; they are always asking how something can be improved.

These changes will have an impact on the organization or the community and that is what should be measured. I found it easier to describe how KM influenced new decisions and in turn programmatic outcomes in a narrative form – what we were doing, what we learned, how we innovated/changed, and the impact.

Hello Jonathan, and thank you very much for sharing your perspective! I think we are at a crucial moment in the history of M&E (of KM and other things) in development. As you pointed out, donors themselves have woken up to the need to incorporate learning in their M&E system expectations, and that is a very good thing.

The approach you seem to have chosen would work for me too: I think it’s our honest duty to question our practices and to keep asking ‘so what?’ until we’ve reached the bottom of an argument/issue.

One caveat, though: I don’t think everything ought to be ‘measured’. We don’t really measure our relationships; we assess them in ways that are more subjective but also more integral (as in more genuine to our personality).

The paper on which this post is based is really coming along and, fingers crossed, should come out in March… We will certainly be blogging more about it soon!

Thanking you again!